Motivated in part by questions raised through the AudioScape project, we were interested in interaction and control within a 3D environment. In particular, we wanted to develop an effective interface for object instantiation, positioning, view control, and other parameter control that moves beyond the limited (and often bewilderingly complex) keyboard and mouse, especially in the context of performance. We divided the problem into three parts: the actions (or gestures) that the user needs to perform, the choice of sensor to acquire these input gestures, and appropriate feedback to indicate to the user what has been recognized and/or performed.

As a starting point, the initial action we considered necessary for almost all others is that of object or target “selection”. In particular, we wanted to investigate the comparative performance benefits of graphical, auditory (e.g. a click or beep when a target is acquired), and haptic (e.g. a vibration transduced through a Wii controller) feedback, either individually or in combination. Next, we wanted to consider how the requirements change as one moves from a 2D (conventional screen) display, to an immersive (multi-screen) display, to a stereoscopic (3D) display, and similarly, as audio evolves from a monophonic, to a stereo, to a fully spatialized modality. Related questions dealt with target density and distance, particularly in a 3D environment. We considered a wide range of possible input devices, although to reduce complexity in the early stages, we confined ourselves to the use of a Wiimote and a motion capture system.

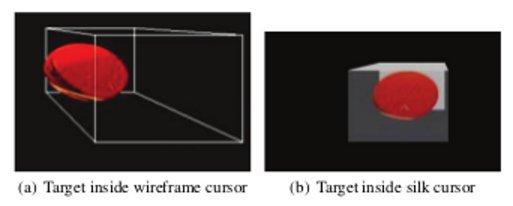

Subsequently, we conducted a number of experiments in which participants were asked to use an augmented Wiimote to control the position of a volumetric cursor and acquire targets as they appeared one by one on the screen. The goal of the initial round of trials in the 3D Interaction experiment was to expand on “The Silk Cursor: Investigating transparency for 3D target acquisition”, a study conducted by Shumin Zhai in 1994. In the original study, users participated in a “virtual fishing” experiment and were asked to acquire targets as they appeared on a screen using a volumetric cursor. In our experiment, we wanted to explore the result of supplementing the visual cues afforded by the volumetric cursor with additional feedback modalities that indicate when the target is completely contained within the cursor. We used spherical targets and two types of cubic volumetric cursor: silk and wireframe.

A successful acquisition means that the target was completely contained within the cursor when the user pressed a button on the Wiimote to make a selection. The additional feedback was offered through audio, visual, and haptic modalities. Cues were delivered either as discrete multimodal feedback, given only when the target was completely contained within the cursor, or as continuous feedback, varying in proportion to the distance between the cursor and the target. Discrete feedback proved more effective, improving accuracy without compromising selection times, whereas continuous feedback resulted in lower accuracy. In addition, participants reacted faster to the haptic stimulus than to visual feedback. Finally, while the haptic modality helped decrease completion time, it led to a lower success rate.
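
To make the acquisition criterion and the two feedback schemes concrete, the following is a minimal Python sketch, not the code used in the experiment. It assumes an axis-aligned cubic cursor and a spherical target. The names `Sphere`, `CubeCursor`, `is_contained`, `discrete_feedback`, and `continuous_feedback` are hypothetical, as are the `max_distance` parameter and the choice to make the continuous cue stronger as the cursor approaches the target (the actual mapping may have differed).

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Sphere:
    """Spherical target (hypothetical representation)."""
    center: Vec3
    radius: float


@dataclass(frozen=True)
class CubeCursor:
    """Cubic volumetric cursor, assumed axis-aligned for simplicity."""
    center: Vec3
    half_size: float  # half of the cube's edge length


def is_contained(target: Sphere, cursor: CubeCursor) -> bool:
    """True when the whole sphere lies inside the cube.

    For an axis-aligned cube, the sphere fits if, on every axis, the
    offset between the centers plus the radius stays within half_size.
    """
    return all(
        abs(t - c) + target.radius <= cursor.half_size
        for t, c in zip(target.center, cursor.center)
    )


def discrete_feedback(target: Sphere, cursor: CubeCursor) -> float:
    """Cue intensity that is fully on only while the target is contained."""
    return 1.0 if is_contained(target, cursor) else 0.0


def continuous_feedback(
    target: Sphere, cursor: CubeCursor, max_distance: float
) -> float:
    """Cue intensity in [0, 1] that varies linearly with distance.

    Assumption: intensity is 1.0 when the centers coincide and falls to
    0.0 at max_distance or beyond.
    """
    if max_distance <= 0:
        raise ValueError("max_distance must be positive")
    distance = math.dist(target.center, cursor.center)
    return max(0.0, 1.0 - distance / max_distance)
```

In this sketch, a selection would count as successful if `is_contained` returns `True` at the moment the button is pressed. The returned intensity could drive any of the modalities, for example the volume of a tone or the strength of the Wiimote vibration.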

Full results of the experiment can be found here.